Fleiss Kappa


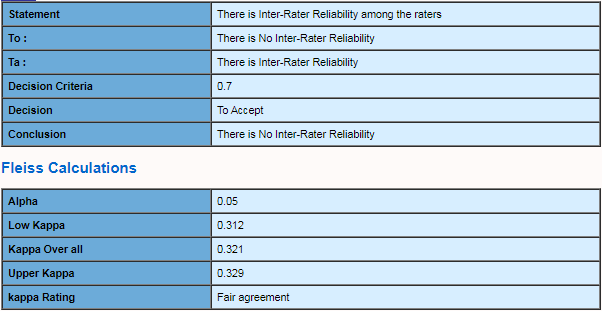

Fleiss' kappa (Inter-Rater Reliability) is a statistical measure for assessing the reliability of agreement between a fixed number of raters when they assign categorical ratings to, or classify, a number of items. This contrasts with other kappas such as Cohen's kappa, which only works when assessing the agreement between no more than two raters, or the intra-rater reliability of one appraiser compared with themself. Fleiss' kappa expresses the degree of agreement in classification beyond what would be expected by chance.
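As a sketch of how the chance correction works, the Python function below implements the standard Fleiss' kappa formula. It assumes the ratings have already been tallied into an N × k table, where `counts[i][j]` is the number of raters who put item i in category j, and that every item was rated by the same number of raters n. It computes each item's observed agreement P_i, their mean P̄, and the chance agreement P̄_e = Σ p_j², then returns κ = (P̄ − P̄_e) / (1 − P̄_e). The name `fleiss_kappa` and the table layout are illustrative assumptions, not part of the Inter-Rater Reliability program.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for an N x k table of rating counts.

    counts[i][j] is the number of raters who assigned item i to category j.
    Every item must be rated by the same number of raters n (n >= 2).
    """
    N = len(counts)
    if N == 0:
        raise ValueError("need at least one item")
    k = len(counts[0])
    n = sum(counts[0])
    if n < 2 or any(len(row) != k or sum(row) != n for row in counts):
        raise ValueError("every item needs the same categories and the same number (>= 2) of raters")

    # p_j: share of all N * n assignments that went to category j
    p = [sum(row[j] for row in counts) / (N * n) for j in range(k)]

    # P_i: proportion of agreeing rater pairs on item i
    P = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]

    P_bar = sum(P) / N                # mean observed agreement
    P_e = sum(pj * pj for pj in p)    # agreement expected by chance
    if P_e == 1:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (P_bar - P_e) / (1 - P_e)


# Three items, two raters, two categories: raters agree on items 1 and 2 only.
print(round(fleiss_kappa([[2, 0], [0, 2], [1, 1]]), 3))  # → 0.333
```

A value of 1 means perfect agreement, 0 means agreement no better than chance, and negative values mean less agreement than chance would produce.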


Fleiss' kappa can be used with binary or nominal-scale. It

can also be applied to Ordinal data (ranked data). However, When you have

ordinal ratings, severity ratings on a scale of 1–5, Kendall's coefficients,

which account for ordering, are usually more appropriate statistics to

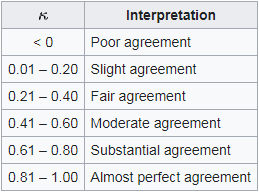

determine association than kappa alone. Landis and Koch (1977) gave the

following table for interpreting kappa

values. |

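| Kappa (κ) | Interpretation |
|---|---|
| < 0.00 | Poor agreement |
| 0.00–0.20 | Slight agreement |
| 0.21–0.40 | Fair agreement |
| 0.41–0.60 | Moderate agreement |
| 0.61–0.80 | Substantial agreement |
| 0.81–1.00 | Almost perfect agreement |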
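To apply the Landis and Koch (1977) benchmarks programmatically, a small lookup like the one below is enough. This is a hypothetical helper, not a feature of the program: the name `landis_koch_label` is an assumption, and treating each band's upper bound as inclusive (so a value such as 0.205 falls in the "Fair" band) is a choice made here because the published bands use two-decimal endpoints. The bands are widely used rules of thumb rather than strict thresholds.

```python
def landis_koch_label(kappa: float) -> str:
    """Return the Landis and Koch (1977) benchmark label for a kappa value."""
    if kappa > 1:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "Poor agreement"
    # (inclusive upper bound, label), checked from the lowest band upward
    bands = [
        (0.20, "Slight agreement"),
        (0.40, "Fair agreement"),
        (0.60, "Moderate agreement"),
        (0.80, "Substantial agreement"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "Almost perfect agreement"


print(landis_koch_label(0.45))  # → Moderate agreement
```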

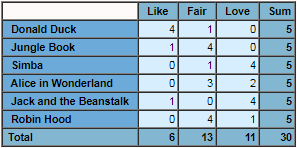

Inter-Rater Reliability allows you to:

1. Enter Rater data
2. Enter Category data
3. Enter Inter-Rater data
4. Calculate Reliability
5. Print a report
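The rater, category, and inter-rater data entered in the program ultimately have to be tallied into a count table before Fleiss' kappa can be calculated. The sketch below shows one way to do that tally in Python, assuming a hypothetical input layout in which each item maps to the list of categories its raters chose. The function name `count_matrix`, the item names, and the Pass/Fail categories are illustrative only and do not describe how the program stores its data.

```python
from collections import Counter
from typing import Dict, List, Sequence


def count_matrix(categories: Sequence[str],
                 ratings: Dict[str, Sequence[str]]) -> List[List[int]]:
    """Tally raw ratings into an N x k table: one row per item,
    one column per category, each cell = number of raters choosing it.

    ratings maps an item name to the category each rater assigned it
    (one entry per rater, in any order).
    """
    allowed = set(categories)
    rater_counts = {len(chosen) for chosen in ratings.values()}
    if len(rater_counts) > 1:
        raise ValueError("every item must be rated by the same number of raters")
    table = []
    for item, chosen in ratings.items():
        unknown = set(chosen) - allowed
        if unknown:
            raise ValueError(f"item {item!r} uses undeclared categories {sorted(unknown)}")
        tally = Counter(chosen)  # missing categories count as 0
        table.append([tally[c] for c in categories])
    return table


# Hypothetical example: three raters grade two parts as Pass or Fail.
categories = ["Pass", "Fail"]
ratings = {
    "Part 1": ["Pass", "Pass", "Pass"],
    "Part 2": ["Fail", "Pass", "Fail"],
}
print(count_matrix(categories, ratings))  # → [[3, 0], [1, 2]]
```

Each row of the result sums to the number of raters, which is the shape Fleiss' kappa expects as input.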